Ethan Mollick is out with a great piece called The Bitter Lesson versus The Garbage Can. He explores a decision companies face: when to build solutions that work within their existing processes, and when to let technology forge an entirely new path. Mollick shares a story about a CEO discovering how broken his organization actually was—something we've seen play out many times in our own work:

For example, a manager walked the CEO through the map, presenting him with a view he had never seen before and illustrating for him the lack of design and the disconnect between strategy and operations. The CEO, after being walked through the map, sat down, put his head on the table, and said, "This is even more fucked up than I imagined." The CEO revealed that not only was the operation of his organization out of his control but that his grasp on it was imaginary.

The core of Mollick's argument builds on Rich Sutton's Bitter Lesson:

The lesson is bitter because it means that our human understanding of problems built from a lifetime of experience is not that important in solving a problem with AI. Decades of researchers' careful work encoding human expertise was ultimately less effective than just throwing more computation at the problem. We are soon going to see whether the Bitter Lesson applies widely to the world of work.

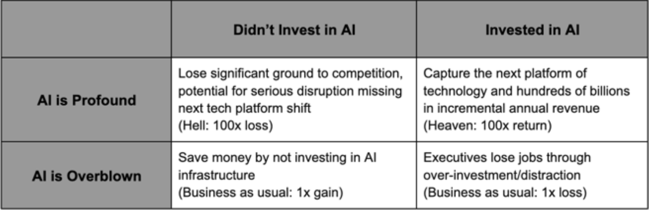

This betting-on-computation approach shows up everywhere in Silicon Valley. In Pascal's Wager in AI, one of our first articles at Alephic, we argued that today's tech giants are behaving as if the stakes of artificial intelligence are nearly infinite: if AI succeeds, the payoff is existentially large; if it fails, the sunk cost is tolerable. From the piece:

Just as Pascal argued that believing in God was a rational choice due to the potential infinite reward versus finite loss, tech companies are applying similar logic to their heavy AI investments. The wager for these hyperscalers can be broken down as follows:

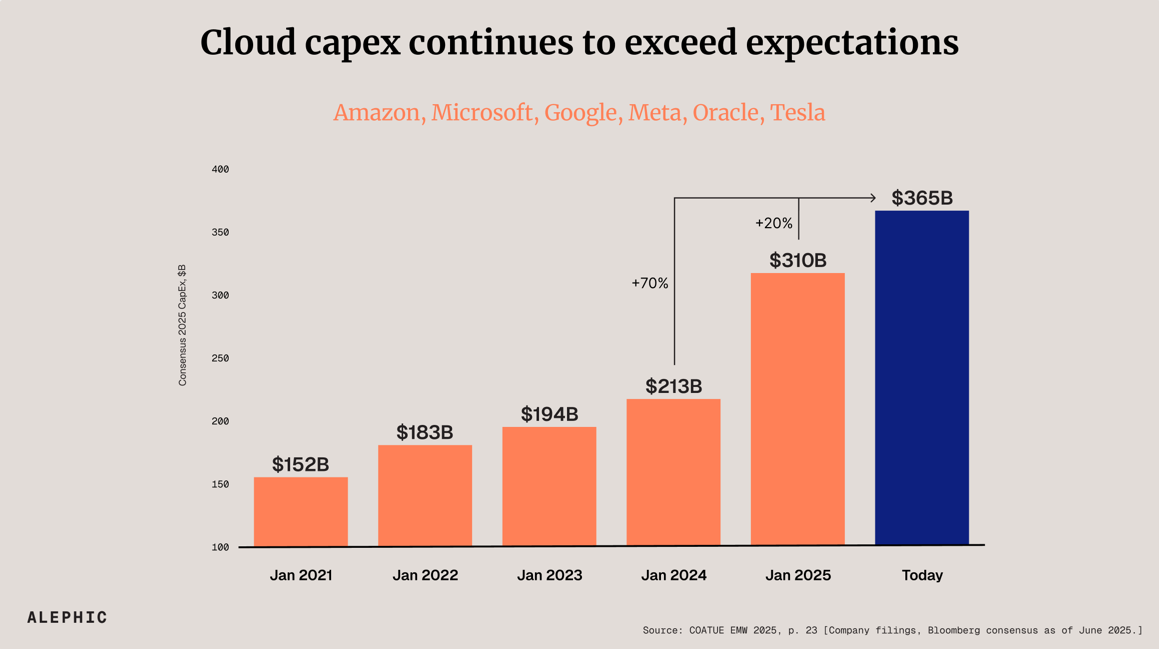

The Pascalian nature of the wager can be seen in the unprecedented capital expenditures going into compute. The biggest companies in the world are betting on the Bitter Lesson—that if you look across the history of AI, scaling general‑purpose computation has outperformed hand‑tuned domain expertise. The belief is simple: more compute → better AI. The numbers are now so staggering that the spending itself feels almost religious.

At this point you might be saying, "well, this is all fine and good, but how do I translate all this capex spend and bitter lessons with AI to how I work day-to-day in my company?"

To answer that, Mollick's contrast between two AI approaches offers a useful metaphor. Manus AI encodes existing processes into rules—much like companies that use AI to automate their current workflows. ChatGPT-style agents, by contrast, learn from outcomes—like organizations willing to let AI discover entirely new ways of working. The lesson for overstretched CEOs isn't about which AI to choose, but which mindset to adopt: stop mapping your organizational mess into code and start letting AI find cleaner paths to your goals.

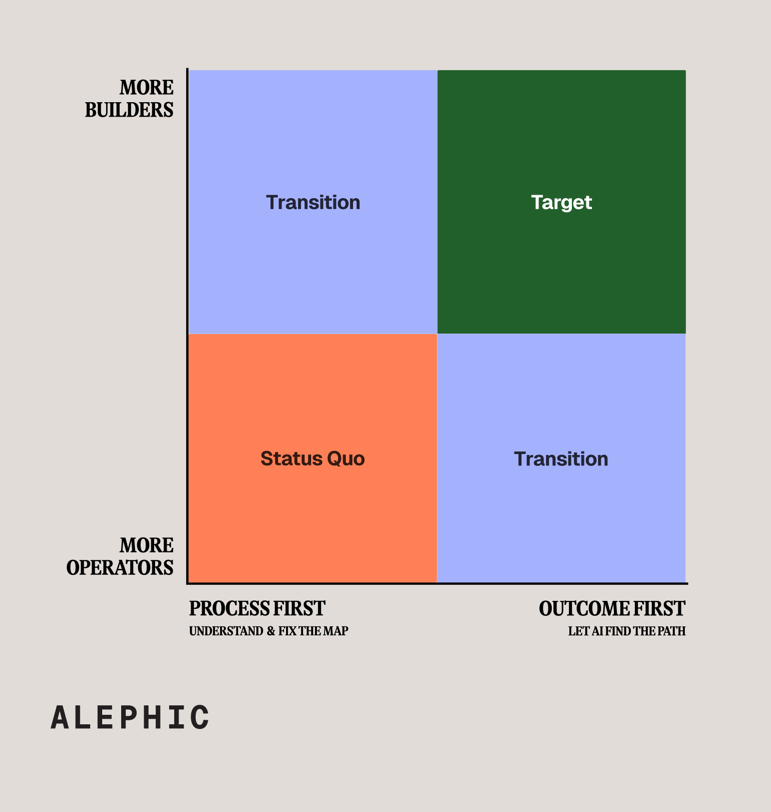

What does this mindset shift look like in practice? Like any good services company, we have a 2×2 for this. It frames an AI initiative along two choices: who steers the work and how the work is planned. When managers chase checklists before outcomes, you're stuck in the Status Quo (bottom-left). Shifting either dimension alone yields a Transition state: move right and managers begin targeting the KPI but can't scale without builders; move up and builders wire in AI yet still replicate yesterday's playbook. The goal is the Target quadrant (top-right), where builder-led teams start with the metric, let AI find and refine the path, and iterate in short, data-driven loops.
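For readers who think in code, the 2×2 can be written down as a tiny lookup. This is only a sketch of the framing above, not a scoring tool: the names `Steering`, `Planning`, and `quadrant` are ours for illustration, and real initiatives rarely sit cleanly in one box.

```python
from enum import Enum


class Steering(Enum):
    """Who steers the work (vertical axis of the 2x2)."""
    MANAGERS = "managers"
    BUILDERS = "builders"


class Planning(Enum):
    """How the work is planned (horizontal axis of the 2x2)."""
    PROCESS_FIRST = "process-first"  # checklists before outcomes
    OUTCOME_FIRST = "outcome-first"  # start with the metric


def quadrant(steering: Steering, planning: Planning) -> str:
    """Return the quadrant label for an AI initiative (illustrative only)."""
    if steering is Steering.BUILDERS and planning is Planning.OUTCOME_FIRST:
        return "Target"      # top-right: builder-led and metric-first
    if steering is Steering.MANAGERS and planning is Planning.PROCESS_FIRST:
        return "Status Quo"  # bottom-left: manager-led and checklist-first
    return "Transition"      # only one of the two dimensions has shifted
```

For example, a manager-led team that has started targeting a KPI, `quadrant(Steering.MANAGERS, Planning.OUTCOME_FIRST)`, lands in a Transition state, as does a builder-led team still replicating yesterday's playbook.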

I see the takeaways as:

- Outcome > process. Start every AI project by articulating the metric that matters, not the steps you think will get you there.

- Builders > managers. Hire, reward, and promote people who can build with AI, not those who write decks about it.

- AI > hard-coded rules. Budget for AI and the data pipelines to feed it. Allow AI to act as the fuzzy interface, translating and absorbing the bureaucratic mess.

- Short cycles > perfect plans. Pilot quickly, measure relentlessly, and redirect resources every sprint.

- Downstream > upstream. Trillions in AI infrastructure spend will make models faster, better, and cheaper, so build where that investment becomes your tailwind. Focus on workflows and outcomes that get more valuable as AI gets better and costs drop.

Finally, I’ll leave you with this from Mollick: “You don’t tame the garbage can by labeling the trash. You decide what ‘clean’ looks like and let machines take out the waste.”