Chain-of-Thought

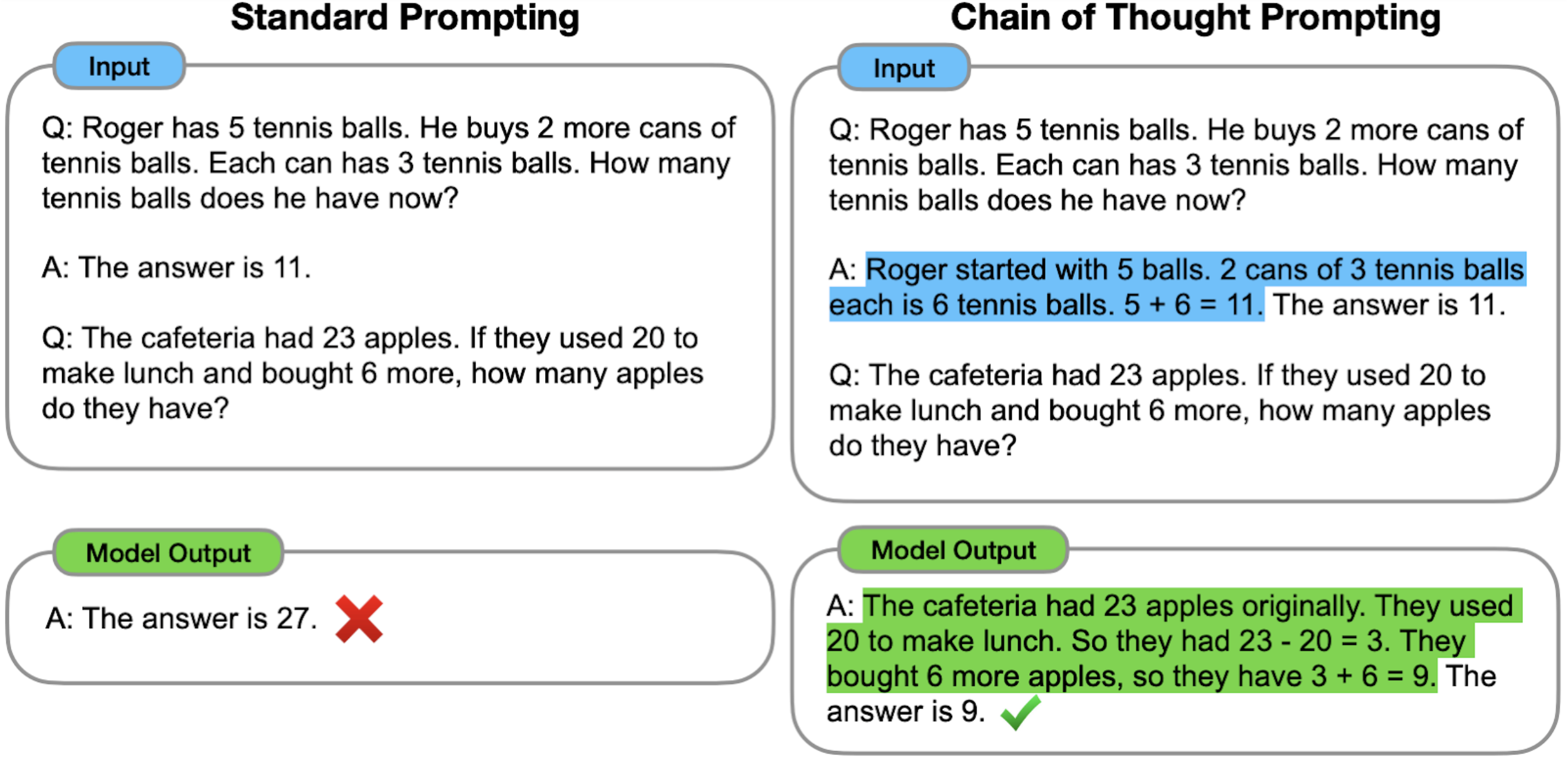

Chain-of-thought prompting turns AI from an answer machine into a reasoning partner by explicitly modeling the problem-solving process within the prompt itself. Born from Google Research's 2022 work by Wei et al., the technique demonstrates that showing your work is more than good practice: on multi-step tasks such as arithmetic word problems, it substantially improves the accuracy of large language models, with the largest gains in the biggest models.

Rather than jumping to conclusions, chain-of-thought breaks complex problems into logical steps, creating a cognitive roadmap the AI can follow and extend. It's the difference between asking for directions and teaching someone to read a map.

Using chain-of-thought:

- Decompose complex queries into sequential reasoning steps

- Include intermediate calculations and logical transitions

- Make implicit thinking explicit through worked examples
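The steps above can be sketched in Python as a small few-shot prompt builder. This is a minimal illustration, not a library API: `Exemplar`, `build_cot_prompt`, and `extract_answer` are hypothetical helpers, and the "Q:/A:" layout and the closing phrase "The answer is" are assumed conventions. Each worked example spells out its intermediate steps before the answer, and the new question is left open so the model continues the same pattern. Sending the prompt to a model is left out.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    """One worked example (hypothetical type): question, intermediate steps, final answer."""
    question: str
    steps: List[str]
    answer: str


def build_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Assemble a few-shot chain-of-thought prompt.

    Each exemplar writes out its intermediate steps before the answer, so the
    model sees the reasoning pattern it is expected to continue.
    """
    blocks = []
    for ex in exemplars:
        reasoning = " ".join(ex.steps)
        blocks.append(f"Q: {ex.question}\nA: {reasoning} The answer is {ex.answer}.")
    # Leave the new question open after "A:" so the model writes its own steps.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after a final "The answer is ...", or None if it is absent."""
    match = re.search(r"The answer is\s+(.+?)\.?\s*$", completion.strip())
    return match.group(1) if match else None


exemplar = Exemplar(
    question="A shop has 3 boxes with 4 pens each. How many pens are there?",
    steps=["Each box holds 4 pens.", "3 boxes hold 3 * 4 = 12 pens."],
    answer="12",
)
prompt = build_cot_prompt([exemplar], "Sam has 2 bags with 5 apples each. How many apples?")
print(prompt)
```

The fixed closing phrase is a design choice: because every exemplar ends the same way, the model's own completion tends to end that way too, which makes the final answer easy to pull out with `extract_answer`.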

The technique's elegance lies in its generality: any reasoning process that can be articulated step by step can be demonstrated in a prompt. Organizations that master chain-of-thought prompting aren't just getting better outputs; they're documenting and scaling their collective intelligence.

Related terms:

Zero-Shot Prompting

Zero-shot prompting is the most basic form of AI interaction, in which questions are posed without any examples or guidance, relying entirely on the model’s pre-trained knowledge. This baseline approach directly tests the model’s raw capabilities, revealing both their breadth and their limits.
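A zero-shot prompt is just the bare question, with no worked examples for the model to imitate. The sketch below uses a hypothetical helper, `zero_shot_prompt`, with an optional `step_by_step` flag that appends "Let's think step by step.", the trigger phrase from the separate zero-shot chain-of-thought technique, which asks for reasoning without supplying any exemplars.

```python
def zero_shot_prompt(question: str, step_by_step: bool = False) -> str:
    """Build a zero-shot prompt (hypothetical helper): the question alone, no examples.

    With step_by_step=True, append the zero-shot chain-of-thought trigger phrase,
    nudging the model to write out its reasoning before answering.
    """
    prompt = f"Q: {question}\nA:"
    if step_by_step:
        prompt += " Let's think step by step."
    return prompt


print(zero_shot_prompt("What is 7 * 8?"))
print(zero_shot_prompt("What is 7 * 8?", step_by_step=True))
```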

Agentic AI

Agentic AI refers to systems that autonomously pursue goals—planning actions, employing tools, and adapting based on feedback—without waiting for human instructions at every step. Unlike passive AI that only responds when prompted, agentic AI can monitor systems, diagnose issues, and propose fixes on its own.
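The plan, act, and adapt cycle described here can be sketched as a loop. This is a simplified illustration under stated assumptions, not any framework's API: `run_agent` is hypothetical, and `decide` stands in for the model, choosing the next tool call from the goal and the observations so far, or returning `None` when it judges the goal met. The tools and the scripted policy in the usage are toy stand-ins.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A decision is a tool call (tool_name, argument), or None meaning "goal reached".
Decision = Optional[Tuple[str, str]]


def run_agent(
    goal: str,
    decide: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> List[str]:
    """Minimal plan-act-observe loop (hypothetical sketch).

    Runs without human input until decide() returns None or max_steps is reached;
    each tool result is fed back to decide() as an observation.
    """
    observations: List[str] = []
    for _ in range(max_steps):
        decision = decide(goal, observations)  # plan the next action
        if decision is None:
            break
        tool_name, argument = decision
        result = tools[tool_name](argument)  # act with a tool
        observations.append(f"{tool_name}({argument}) -> {result}")  # observe
    return observations


def scripted_decide(goal: str, observations: List[str]) -> Decision:
    # Toy stand-in for a model: check the service, propose a fix if it is down, then stop.
    if not observations:
        return ("check_status", "web")
    if observations[-1].endswith("down"):
        return ("propose_fix", "web")
    return None


tools = {
    "check_status": lambda service: "down",
    "propose_fix": lambda service: f"restart {service}",
}
log = run_agent("keep the web service healthy", scripted_decide, tools)
print(log)
```

The `max_steps` budget is a deliberate safeguard: an autonomous loop needs some stopping condition besides the policy's own judgment.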

Transformer

The transformer is the neural network architecture, introduced in Vaswani et al.’s 2017 paper “Attention Is All You Need,” that replaces recurrence with self-attention computed in parallel across all positions, enabling efficient training on internet-scale data. Its simple, scalable attention-based design powers state-of-the-art models across text, vision, protein folding, audio synthesis, and more.
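For reference, the core operation defined in that paper is scaled dot-product attention, where $Q$, $K$, and $V$ are the query, key, and value matrices (in self-attention, all three are projected from the same input sequence) and $d_k$ is the key dimension:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V
$$

Because the scores of every position against every other position come out of a single matrix product $QK^{\top}$, the whole sequence is processed at once rather than token by token as in a recurrent network.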